Microsoft Defender ATP Streaming API

UPDATE April 19, 2020: MTP Schema Alignment

If I take one more step, I’ll be the farthest away from home I’ve ever been.

Sam, Lord of the Rings

Until now, we could only consume the MDATP API ‘on demand’ (pull), for example with PowerShell.

We could even do advanced hunting queries via the API.

However, pulling the data out of MDATP can introduce a delay in some scenarios, and advanced hunting results are limited to the last 30 days.

With the brand new ‘Streaming API’ (in preview), Microsoft is offering a new approach to make data from MDATP available outside of the portal.

Why would you want to export data from MDATP?

Well, as mentioned: some data structures (advanced hunting) are only available for 30 days. You might want to keep them longer for retention purposes, or export them to a SIEM for further processing.

Anyway, let’s go one step further now and see how this looks in practice. When you visit your favorite security portal on securitycenter.windows.com, under ‘Interoperability’, you will recognize a new option called ‘Data export settings’:

Clicking on that brings you to the page where you can set up new connections to external targets:

We are keen enough to immediately press ‘Add data export settings’. This then pops up the following dialog:

As you can see, we currently have the choice of two targets:

- Forward events to Azure Storage

- Forward events to Azure Event Hub

In this blog post, we will concentrate on the first choice, ‘Azure Storage’. In addition, you can check which event types you want to stream to your chosen target. Note that this is the same data schema as you have available in ‘advanced hunting’:

Since we have now decided to stream MDATP data to Azure Storage, we suddenly feel the basic need for a new Azure Storage account. So, let’s switch to the Azure Portal:

After you hit ‘add’, you must fill out some settings to create a new storage account:

It’s fine to leave all other settings as they are, click ‘Review + create’ and then ‘Create’ to finish creating the storage account. Next, we need to register ‘Microsoft.insights’ as a ‘Resource Provider’. In my case, this was already registered. Anyway, check here (in the Azure Portal):

Subscriptions > Your subscription > Resource Providers > Register to Microsoft.insights

Before you now go back to the MDATP portal, wait a second:

As we want to connect MDATP streaming API to this freshly created storage account, we need to somehow target this storage account. Therefore, we go to the properties of the storage account and look for the ‘storage account resource ID’:

We copy the ‘resource ID’ and switch back to MDATP and paste it there:
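If you want to sanity-check the resource ID before pasting it, here is a minimal Python sketch. It assumes the usual ARM layout for a storage account ID (`/subscriptions/.../resourceGroups/.../providers/Microsoft.Storage/storageAccounts/...`). The function name and the example values are hypothetical and only for illustration.

```python
import re

# Assumed ARM layout of a storage account resource ID.
# Segment names are matched case-insensitively.
RESOURCE_ID_PATTERN = re.compile(
    r"^/subscriptions/(?P<subscription_id>[^/]+)"
    r"/resourceGroups/(?P<resource_group>[^/]+)"
    r"/providers/Microsoft\.Storage/storageAccounts/(?P<account_name>[a-z0-9]{3,24})$",
    re.IGNORECASE,
)


def parse_storage_resource_id(resource_id: str) -> dict:
    """Split a storage account resource ID into its parts, or raise ValueError."""
    match = RESOURCE_ID_PATTERN.match(resource_id.strip())
    if match is None:
        raise ValueError(f"Not a storage account resource ID: {resource_id!r}")
    return match.groupdict()


# Hypothetical example values, not taken from a real tenant.
example = (
    "/subscriptions/00000000-0000-0000-0000-000000000000"
    "/resourceGroups/rg-mdatp/providers/Microsoft.Storage"
    "/storageAccounts/mdatpexport"
)
parts = parse_storage_resource_id(example)
print(parts["account_name"])
```

A check like this only catches copy and paste mistakes (for example, copying the account name instead of the full ID). It does not verify that the account actually exists.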

Press save and we are ready to go. Everything is set up, and MDATP will start streaming to the Azure Storage account within the next few minutes.

The official documentation of what we have just done here (and also on how to connect to Event Hub) is available here.

But let’s proceed to see what we can do with that exported data now.

The first thing to know is that the data from MDATP is stored as blobs within the created storage account. If you start the Azure Storage Explorer from the search bar in the Azure Portal, you can browse the blob containers:

As you can see above, our well-known data schema from advanced hunting has arrived in the blob. When you browse through the containers, you will find a structure like this:

… and at the end of our click-folder-journey, we finally get to the ‘golden’ json which kind of looks like this:
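If you download one of these JSON blobs, you can also process it with a few lines of Python. The following is a rough sketch only: it assumes each line of the blob holds one JSON record with the event itself nested under ‘properties’. The sample records, their top-level fields and all values are made up for illustration, so check them against your own blobs.

```python
import json
from typing import Iterator


def iter_records(blob_text: str) -> Iterator[dict]:
    """Yield one dict per non-empty line (assumes one JSON record per line)."""
    for line in blob_text.splitlines():
        line = line.strip()
        if line:
            yield json.loads(line)


def summarize(record: dict) -> dict:
    """Pick a few fields from the nested 'properties' object."""
    props = record.get("properties", {})
    wanted = ("EventTime", "ComputerName", "Severity", "Title")
    return {key: props.get(key) for key in wanted}


# Hypothetical sample content; real blobs will carry more fields.
sample_blob = "\n".join([
    json.dumps({
        "time": "2020-04-19T10:00:00Z",
        "category": "AdvancedHunting-AlertEvents",
        "properties": {
            "EventTime": "2020-04-19T09:58:12Z",
            "ComputerName": "pf3tern-01",
            "Severity": "Medium",
            "Title": "Example alert",
        },
    }),
    json.dumps({
        "time": "2020-04-19T10:05:00Z",
        "category": "AdvancedHunting-AlertEvents",
        "properties": {
            "EventTime": "2020-04-19T10:03:40Z",
            "ComputerName": "pf3tern-02",
            "Severity": "Low",
            "Title": "Another example alert",
        },
    }),
])

rows = [summarize(record) for record in iter_records(sample_blob)]
for row in rows:
    print(row)
```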

Now, let’s add a little fun here. I thought it could be cool to index this Azure Storage blob and make it searchable with ‘Azure Search’. For over two years now, ‘Azure Search’ has been able to parse JSON blobs.

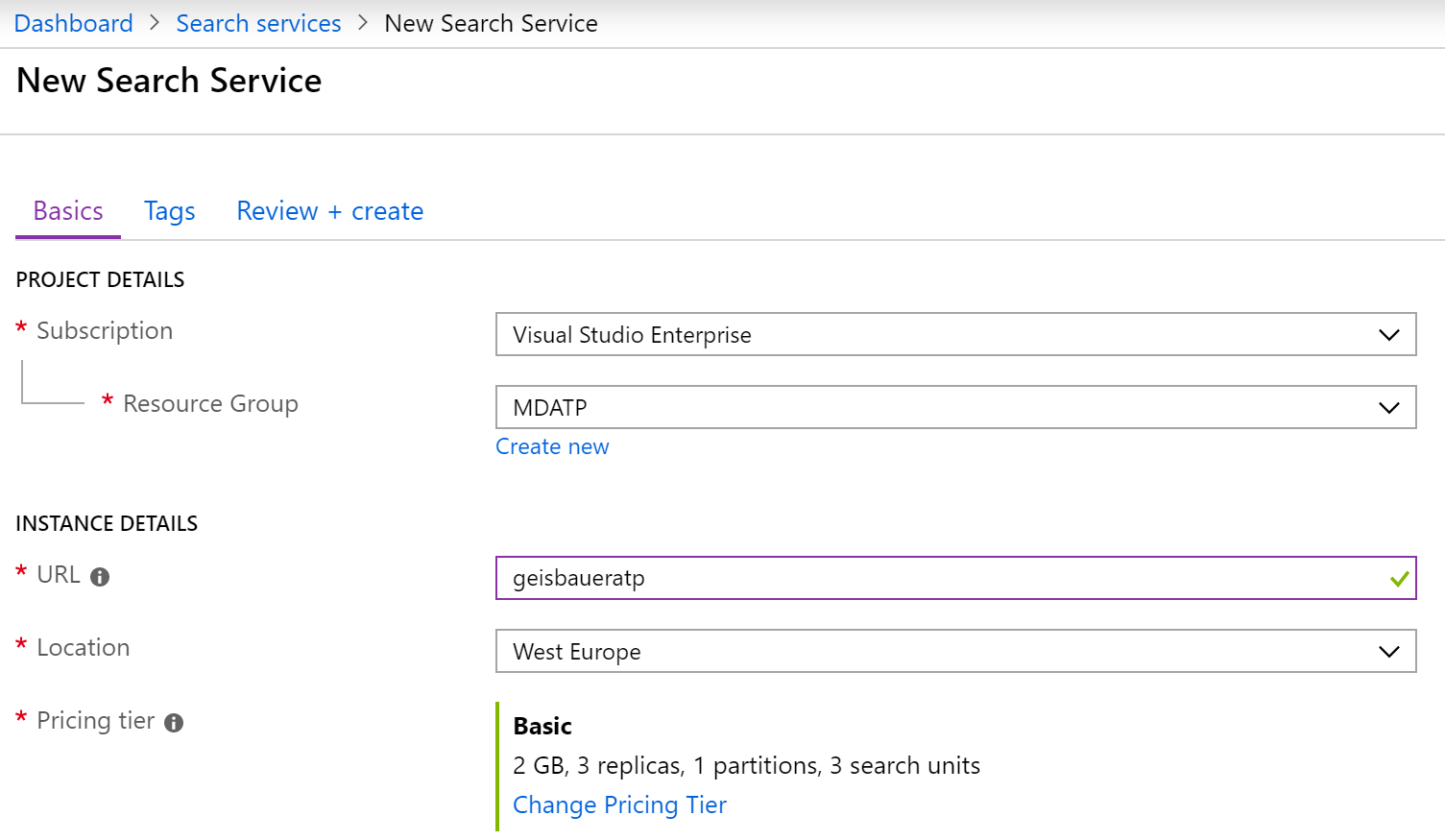

To get started with Azure Search, type its name in the search bar on the Azure Portal. Then add a new search service:

Once it’s created, click on ‘import data’ on the overview page:

Then choose ‘Azure Blob Storage’:

You then must give it a name, choose ‘content and metadata’, choose parsing mode ‘JSON’, point to the connection string of your storage account (just choose existing connection) and then choose the container. In my case, I will choose ‘insights-logs-advancedhunting-alertevents’:

Then, create it and skip the ‘cognitive search’ settings. Now, it is important that you expand ‘properties’ when you check which fields should be recognized by Azure Search:

Click next and schedule the indexer hourly. Then finish the creation. After a short while our index storage size grows:
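For those who prefer scripting over clicking: the wizard choices above (‘content and metadata’, parsing mode ‘JSON’, hourly schedule) roughly correspond to an indexer definition in the Azure Search REST API. The sketch below only builds that definition as a Python dict and does not send it anywhere. The indexer, data source and index names are hypothetical, and I am assuming the field names `dataToExtract`, `parsingMode` and `schedule.interval` as used by the ‘Create Indexer’ REST call, so verify them against the API version you use.

```python
import json


def build_indexer_definition(name: str, data_source_name: str, index_name: str) -> dict:
    """Build an indexer definition that mirrors the portal wizard settings."""
    return {
        "name": name,
        "dataSourceName": data_source_name,
        "targetIndexName": index_name,
        # ISO 8601 duration: run the indexer once per hour.
        "schedule": {"interval": "PT1H"},
        "parameters": {
            "configuration": {
                # 'content and metadata' in the wizard.
                "dataToExtract": "contentAndMetadata",
                # Parsing mode 'JSON' in the wizard.
                "parsingMode": "json",
            }
        },
    }


# Hypothetical names, chosen only for this example.
definition = build_indexer_definition(
    name="mdatp-alertevents-indexer",
    data_source_name="mdatp-alertevents-datasource",
    index_name="mdatp-alertevents-index",
)
print(json.dumps(definition, indent=2))
```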

Now click on ‘search explorer’ to start investigating. When you just click ‘search’, you get all results from the json:

But this is a powerful search engine. You can do many things with it to narrow down your results. For example, you can search for a part of the ComputerName and select only the JSON properties you want to display:

search=pf3tern&$select=properties/EventTime,properties/ComputerName,properties/Severity,properties/Category,properties/Title

Note that ‘properties’ is a nested object with its own set of fields. That’s why you have to refer to values within ‘properties’ with the ‘properties/’ prefix, as I did above. For more search hints check here:

This comes then to the following results:

Nice, isn’t it?
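The same query can also be sent outside of the portal. Here is a small sketch that only builds the request URL for the search shown above; it does not call the service. The service and index names are hypothetical, and the URL layout and the default `api-version` are my assumptions based on the Azure Search REST API, so double-check them before use.

```python
from urllib.parse import urlencode


def build_search_url(
    service_name: str,
    index_name: str,
    search_text: str,
    select_fields: list,
    api_version: str = "2020-06-30",  # assumed default, adjust as needed
) -> str:
    """Build a GET URL for a simple search with a $select projection."""
    query = urlencode(
        {
            "api-version": api_version,
            "search": search_text,
            "$select": ",".join(select_fields),
        },
        # Keep '$', ',' and '/' readable, as in the search explorer query.
        safe="$,/",
    )
    return f"https://{service_name}.search.windows.net/indexes/{index_name}/docs?{query}"


# Hypothetical service and index names.
url = build_search_url(
    service_name="mysearch",
    index_name="myindex",
    search_text="pf3tern",
    select_fields=["properties/EventTime", "properties/ComputerName"],
)
print(url)
```

To actually run the query, you would send a GET request to this URL with a query key of your search service in the `api-key` header.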

Conclusion

So, this ends our little journey out of the Shire. We have seen how to export data out of the MDATP realm to extend its availability (retention). We have also seen how easy it is to then make this data available for search through Azure Search. I guess we will see more use cases like the one described here in the future. I bet ‘Azure Sentinel’ will be a very interesting target for the streaming API, too. Thanks for reading!

Thanks for this. There is lots of info out there on how to get data out of ATP, but only yours has been helpful in figuring out how to query the data. Have you found any other ways to query the data?

Thanks, Dan


Sure, you can use the brand new MTP API: https://techcommunity.microsoft.com/t5/microsoft-365-defender/say-hello-to-the-new-microsoft-threat-protection-apis/ba-p/1669234
